Facebook announced a number of measures, including penalties, aimed at individuals who spread false information. This is an escalation on Facebook’s part in its effort to reduce the amount of toxic content shared on the platform.

Facebook Targeting Individuals to Stop Spread of False Information

Past actions have focused on Facebook Pages and organizations that spread misinformation. This time Facebook is targeting individual members in an effort to educate them. If they fail to improve their behavior, Facebook will take action to limit the reach of their posts.

The announcement described the new actions as aimed at individual members:

“Today, we’re launching new ways to inform people if they’re interacting with content that’s been rated by a fact-checker as well as taking stronger action against people who repeatedly share misinformation on Facebook.

Whether it’s false or misleading content about COVID-19 and vaccines, climate change, elections or other topics, we’re making sure fewer people see misinformation on our apps.”

The effort to limit misleading content is structured into three actions:

- Inform Members Before Interacting with Misleading Pages

- Penalize Members Who Share False Information

- Educate Individuals Who Have Shared Toxic Content

Inform Members Before Sharing

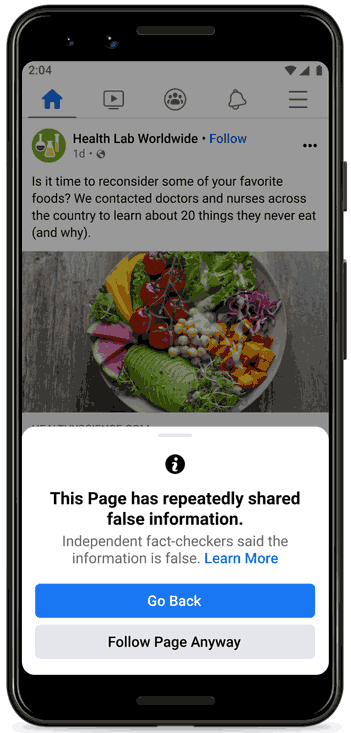

The process for stopping individuals from sharing toxic content begins before they like a page that is known to share misleading content.

This will help slow down the likes that a page acquires, but more importantly, it will help educate the individual about the toxic nature of the page they are considering liking.

Facebook will show a popup warning about the page, along with a link that provides more information about its fact-checking program.

Screenshot of Popup Warning Against False Information Page

Penalize Members Who Share False Information

The next step in the process for limiting the spread of false information is to penalize individuals who repeatedly share toxic content that has been rated as false by Facebook’s fact-checking partners.

The penalty consists of limiting the reach of these members’ Facebook posts so that fewer people see them.

According to Facebook’s announcement:

“Starting today, we will reduce the distribution of all posts in News Feed from an individual’s Facebook account if they repeatedly share content that has been rated by one of our fact-checking partners.”

Educate Users About Past False Information They’ve Shared

The last part of this effort to limit the spread of false information is to educate members who have shared content that was subsequently rated as being false information.

Under the current practice, when content is rated, a notice is sent to the member alerting them to the status of the content they shared.

Facebook has redesigned this notice to make it clearer, so that the member better understands why the content was rated false. The notice also warns them that they may face penalties if they share more false information.

Facebook’s announcement explained it like this:

“We currently notify people when they share content that a fact-checker later rates, and now we’ve redesigned these notifications to make it easier to understand when this happens.

The notification includes the fact-checker’s article debunking the claim as well as a prompt to share the article with their followers.

It also includes a notice that people who repeatedly share false information may have their posts moved lower in News Feed so other people are less likely to see them.”

Reducing the Spread of Misleading Content

Facebook is documented to be well tuned to removing spam, graphic violence and adult content. Much of it was reported to be caught by Facebook’s automated systems.

Acting against misinformation at the level of the individual member is a new front in an ongoing war against false information.
